| Social Network Spam and Author/Agent Rank Posted: 16 Sep 2011 04:14 AM PDT Posted by dohertyjf

On Wednesday I presented at SMX on the panel called "Facebook, Twitter, and SEO". I was excited to speak alongside Horst Joepen (SearchMetrics), Jim Yu (BrightEdge), and Michael Gray (Atlas Web Services). In my talk, I showed some information from patents describing how a search engine might detect a person's topical relevance and authority, and use that as a scale for deciding whether or not to pass link juice from their social shares. Let's explore some of these a bit more.

What Factors Might Search Engines Look At?

There are three concepts I would like to introduce you to.

Topical Trustrank

The first concept you should be familiar with is "topical Trustrank". The original Trustrank was first mentioned in this Yahoo patent from 2004. At the time, it seemed underdeveloped, and worse, it was open to spam, since it relied on websites to tag themselves (not unlike the meta keywords tag). The patent was granted in 2009 as a way to rank sites based on labels given them by people, according to this article called Google Trustrank Patent Granted.

Another take on Trustrank is Topical Trustrank, which was introduced in 2006. Because Trustrank seemed to be biased heavily towards larger communities that could attract more spam pages (without tripping a spam threshold, maybe?), Topical Trustrank aimed to build trust based on the relevance of the connecting sites (and I would argue now, the topical relevance of those sharing links via social networks).

Author Rank

According to one Yahoo patent application, "...author rank is a 'measure of the expertise of the author in a given area.'" Since this is delightfully vague, here are some specific areas (taken from Bill Slawski's How Search Engines May Rank User Generated Content) that the search engines might look at to determine if you are authoritative:

- A number of relevant/irrelevant messages posted;

- Document goodness of all documents initiated by the author;

- Total number of documents initiated or posted by the author within a defined time period;

- Total number of replies or comments made by the author; and,

- A number of [online] groups to which the author is a member.

We can take these and apply them to social as well. If they are calculating author rank based off of content taken from around the web, why would they not also use this author rank for your social shares? Here are some more questions a search engine might ask about a user (according to an email I received from Bill Slawski):

- Do they contribute something new, useful, interesting?

- Are they tweeting new articles, or recycling old articles? Are they sharing articles from just one site, or are they sharing articles from a number of different sites? What's their engagement/CTR?

- Do they participate in meaningful conversations with others?

- Are they replying to others through @replies or other channels (DMs, maybe?)? What topics?

- Do those others contribute something new, useful, interesting?

- Are they themselves keeping the cycle going and replying to various others, or always responding to the same users?

Agent Rank

According to this article from Search Engine Land, Google applied for a patent on a way to determine an agent's, or author's, authority in a specific niche. According to the article:

Content creators could be given reputation scores, which could influence the rankings of pages where their content appears, or which they own, edit, or endorse.

Also according to the article, here are some of the goals of Agent Rank:

- Identifying individual agents responsible for content can be used to influence search ratings.

- The identity of agents can be reliably associated with content.

- The granularity of association can be smaller than an entire web page, so agents can disassociate themselves from information appearing near the information for which the agent is responsible.

- An agent can disclaim association with portions of content, such as advertising, that appear on the agent’s web site.

- The same agent identity can be attached to content at multiple locations.

- Multiple agents can make contributions to a single web page where each agent is only associated to the content that they provided.

Does the following sound like the new rel=author markup that we're seeing in the search results? I think it does:

"Tying a page to an author can influence the ranking of that page. If the author has a high reputation, content created by him or her may be considered to be more authoritative than similar content on other pages. If the agent reviewed or edited content instead of authoring it, the score for the content might be ranked differently."

"An agent may have a high reputation score for certain kinds of content, and not for others – so someone working on site involving celebrity news might have a strong reputation score for that kind of content, but not such a high score for content involving professional medical advice."

The article goes on to explain that authority scores will be hard to build up, but easy to harm. This would be one way to keep authors producing high quality content. Some more factors that may influence authority:

- Quality of the response

- Relevance of the response

- The authority of those who respond to what you post

The Google Person Theory

Here's a philosophical argument that I came up with, which I like to call the Google Person Theory, about how Google could determine author authority:

- If you share articles frequently around a certain topic, you must be involved with that topic.

- If you are involved with that topic, you will also be writing about that topic.

- If you are writing about that topic, others will be sharing your writing on that topic.

- If others are sharing your writing on that topic, you must be authoritative about it.

- Therefore, articles you share within that same topic can be trusted (and potentially ranked higher).

According to Search Engine Land, "Authoritative people on Twitter lend their authority to pages they tweet." (source)

How might search engines view my sharing and that of my followers?

Two weeks ago Duane Forrester from Bing posted an interesting article showing how they might visualize whether someone is attempting to game their ranking signals by sharing a lot, or whether the rise in sharing is natural. According to the Information Retrieval based on historical data (PDF) patent:

A large spike in the quantity of back links may signal a topical phenomenon (e.g., the CDC web site may develop many links quickly after an outbreak, such as SARS), or signal attempts to spam a search engine (to obtain a higher ranking and, thus, better placement in search results) by exchanging links, purchasing links, or gaining links from documents without editorial discretion on making links.

If we take "back links" and replace it with social shares, we get this:

A large spike in the quantity of [social shares] may signal a topical phenomenon (e.g., the CDC web site may develop many links quickly after an outbreak, such as SARS), or signal attempts to spam a search engine (to obtain a higher ranking and, thus, better placement in search results) by exchanging [shares], purchasing [shares], or gaining [shares] from [others] without editorial discretion...

If you are automatically tweeting every interesting article that comes your way, and you have a large network of people who do the same in an attempt to game the signals, here is the image of how Bing might view those manipulated ranking signals (the below is an example of a "Like Farm"). Check out all of the hubs on the image below:

And here is an image of non-manipulated, truly viral signals. Check out the wide scatter of sources:

Some quick pieces of data to dissuade you from spamming or completely automating

We hear a lot of talk around automating your social stream. This seems like an oxymoron to me, since it undercuts the whole purpose of "social" media. Here is an interesting statistical graph for you: Manual tweets get twice the clicks on average!

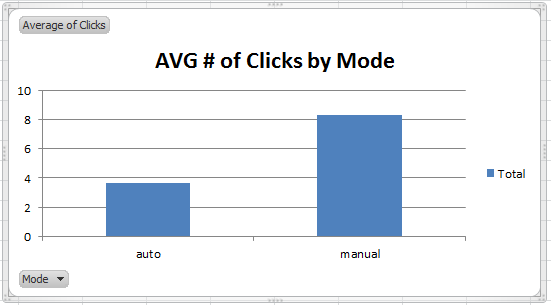

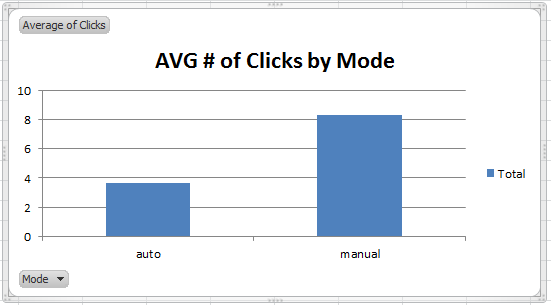

Next, if you're interested in whether automating your Twitter stream will increase your followers, take this next graph into account:

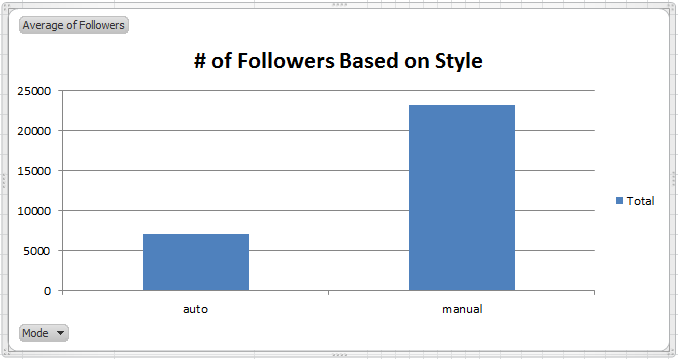

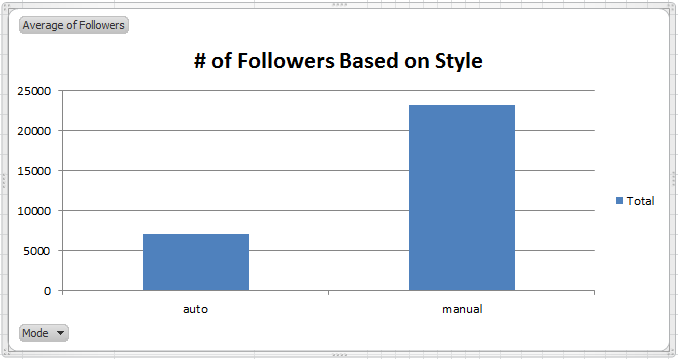

Key learning: Less automation = more followers (All data gathered from Triberr - The Reach Multiplier)

How can I build my author trustrank with the search engines?

Here are some ways to benchmark and build your author presence in the eyes of the search engines:

Author microformats - If you own a website, you most definitely should implement the new rel=author microformat, validating through Google Plus. This is a fantastic way to directly claim your content to the search engines. Here is how to implement it on WordPress (via Joost de Valk) and here is the official Google page on authorship.

Klout Topics - Since we were talking about topical trustrank earlier as well, you might want an idea of which topics the search engines might consider you authoritative about. I think that Klout Topics is a good place to start.

Gravatar - Ross Hudgens wrote a great post a few months ago called Generating Static Force Multipliers for Great Content wherein he talked about the importance of a consistent personal brand and image across the Internet. If you have the same photo across many different sites, how could the search engines not use this in determining trustworthiness?

KnowEm - A website where you can find out whether your username has been taken across many different social networks. This is a great place to go to learn where you need to sign up to protect your username, and therefore your personal brand and author trust.

Conclusion

Author authority has long been a topic of discussion in SEO circles and we've wondered "Does Google have an author rank?" From these patents, I think it is obvious that they have the capability, and especially now with Google Plus for Google, and Facebook for Bing, both are going to be making this even more of a priority. I'd love to hear your thoughts. |

| Keyword Metrics for SEO (And Driving Actions from Data) - Whiteboard Friday Posted: 15 Sep 2011 02:02 PM PDT Posted by Aaron Wheeler

We all have access to a lot of great metrics surrounding keywords we research and target, including data from search engines themselves, analytics suites, and other third-party companies (ourselves included). It's one thing to gather and parse this data, but it's a whole 'nother thing to actually act on that data to drive traffic. On this week's Whiteboard Friday, Rand illustrates some ways to take the keyword metrics you've garnered and turn them into visits to your sites. Have any tips on interpreting and acting on keyword metrics? Let us know in the comments below!

Video Transcription

Howdy SEOmoz fans! Welcome to another edition of Whiteboard Friday. This week, we're talking about some keyword metrics for SEO and how you can drive action from the data that you collect.

Now, some of this data is in the SEOmoz platform. A lot of it is stuff that we haven't added in or that you might be calculating manually yourself. Certainly, all of it is available from your web analytics tool, Google Analytics or Omniture or Webtrends, whatever you are using, and from doing some specific analysis yourself, maybe in Excel or something like that. What I want to help you do here is take all of this information, all of these data points that you are collecting about the keywords that are driving traffic to your site and be able to use them to take smart actions. Collecting metrics is fine. It's good to have analytics, good to have data. But it is useless unless you do something with it, right? I mean, why bother looking at the number of or the percentage of market share of search engines unless you're going to actually take action based on those things. So, let's dive in here and take a look real quick.

The first one that I want to talk about is search engines versus market share. This is things like, okay, I am getting 84% of my traffic from Google, 8% from Bing, 6% from Yahoo. This is fine, and it is something that you might want to measure over time. Over time if you see that your shares are increasing or decreasing, you might take some action. But the thing that you really want to do is compare it against the market averages. I will have Aaron put in the Whiteboard Friday post a link to I think it is StatCounter, who has some great metrics around what these average market shares are for most websites and referring traffic.

Key important point - do not, do not use comScore data. If you use comScore data, what you will see is something like Google has 65% market share, Bing has 14% market share, Yahoo has 20% market share. No, no, no, no. Everyone's website shows that they are getting between, let's say, 80% and 90% of their search traffic from Google. So, if Google's market share is 65%, how is this possible? Well, the reason is that comScore is counting all searches on the Microsoft networks and on Yahoo's network. Yahoo has a huge content network. Microsoft has billions of web pages. Any searches performed internally on those sites count towards the search share, versus Google, who basically has very few actual web properties, media properties, content properties, so all the searches go offsite to somewhere else. Very few of these searches stay on Google versus Bing and Yahoo, where a large percentage of the searches that are performed end up back on Microsoft or Yahoo properties.

The action that you take once you see one of these comes when your market share numbers are way off, and you think they are way off for your sector too, so you could use some scoring against some competition. You could use something like Hitwise to determine that. You would want to say, "Oh, you know what? We are low on Bing. We are going to expend extra effort to try and get traffic from Bing." The other thing that I'd highly recommend that you put in here along with this column is . . . so, search engine, market share, you versus the average market shares, and then you also want to have something like conversions data. Throughout this talk, throughout this quick Whiteboard Friday, you'll see some conversions: I have 3 here, I have 6 there, I have 9, I have 10, whatever it is. I want to say that I know conversion is one of those metrics that is tough to gauge, especially for a lot of B2B folks or folks who are driving traffic to their site and then they have offline conversions, conversions via phone, all of these kinds of things. So, getting at what the actual conversion value of these kinds of things is can be very tough.
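To make the "you versus the average" comparison concrete, here is a minimal Python sketch. The `my_share` numbers echo the 84/8/6 split mentioned above; the `market_share` benchmark, the function names, and the 2-point threshold are all made-up assumptions for illustration, not real StatCounter or Hitwise data.

```python
# "my_share" echoes the example split from the talk.
# "market_share" is a hypothetical benchmark, not real StatCounter data.
my_share = {"google": 84.0, "bing": 8.0, "yahoo": 6.0}
market_share = {"google": 82.0, "bing": 11.0, "yahoo": 6.0}


def share_gaps(mine, market):
    """Return engine -> (my share minus benchmark share), in percentage points."""
    return {
        engine: round(mine.get(engine, 0.0) - market[engine], 1)
        for engine in market
    }


def underperforming(mine, market, threshold=2.0):
    """List engines where my share trails the benchmark by `threshold` points or more."""
    gaps = share_gaps(mine, market)
    return sorted(engine for engine, gap in gaps.items() if gap <= -threshold)


print(share_gaps(my_share, market_share))
print(underperforming(my_share, market_share))
```

With these invented numbers, Bing is the engine flagged for extra effort. The threshold is a judgment call; what counts as "way off" will depend on your sector.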

If you are in a pinch and you want a metric that suggests, infers conversion, infers value, I really love using browse rate. So, browse rate . . . I'm going to come over here for a sec. Browse rate is essentially a simple metric that will say something like 1.7, which means that on average when somebody visited one of my web pages, they browsed, let's say, 1.7 pages, meaning that if 100 visits come, I will probably get 170 page views from those 100 visits that land on this page that come from that search engine, that come from that source. So, do you get what I am saying? Basically what's nice about browse rate is it exists for every source that you could possibly think of, and it is there by default. You don't need to add a conversion action. It strongly suggests, strongly infers usually, that you will get more value from that visitor.

Browse rate is a great sort of substitute if for some reason you can't get conversions. If you can get conversions, by all means put them in. Add action tracking. You should have that in your web analytics. But if for some reason you can't, browse rate is a good substitute. It's a great way to substitute if you are looking at traffic sources that aren't conversion heavy but you still want to compare the value against them.
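As a rough sketch, browse rate as described here is just page views divided by visits for a given source. The Python below assumes that definition; the function name and the per-source numbers are hypothetical.

```python
def browse_rate(page_views, visits):
    """Average page views per visit for one traffic source."""
    if visits <= 0:
        raise ValueError("visits must be positive")
    return page_views / visits


# Hypothetical (visits, page_views) pairs per traffic source.
sources = {
    "google": (100, 170),
    "bing": (40, 92),
}

for name, (visits, page_views) in sources.items():
    print(name, round(browse_rate(page_views, visits), 2))
```

Because every source has visits and page views by default, the same comparison works even for sources with no conversion tracking.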

Let's look at keyword distribution. A lot of people like to break out keyword distribution in a number of formats. Keyword distribution is a common metric. Folks will say, "Oh, how much traffic, how many keywords are coming from the head of the demand curve, middle of demand curve, tail of the demand curve, or long tail?" I actually like using four breakouts. So, what I do is, like, head is more than 500 searches per month in terms of volume. Middle would be like 50 to 499 searches. Tail would be less than 50, and then long tail would be, like, there is no volume report. Google AdWords tool or whatever your data is pulling says, "Boy, you know what? There is nothing from here."
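The four breakouts can be sketched as a small Python function. The thresholds come from the talk; the function name is an assumption, as are the choices to treat exactly 500 searches as "head" and to represent "no volume reported" as `None`.

```python
def demand_bucket(monthly_searches):
    """Map a reported monthly search volume to a demand-curve bucket.

    `None` stands for "the keyword tool reports no volume" (an assumption
    about how missing data is represented).
    """
    if monthly_searches is None:
        return "long tail"
    if monthly_searches >= 500:  # the talk says "more than 500"; 500 itself is assumed to be head
        return "head"
    if monthly_searches >= 50:
        return "middle"
    return "tail"


for volume in (2400, 120, 12, None):
    print(volume, "->", demand_bucket(volume))
```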

Again, these are frustrating metrics to get. If you need to get them, what I would recommend is use a combination of your rank, so if you are tracking with SEOmoz or you are using the SEO Book Rank Tracker, or you're using some professional enterprise level thing like Conductor or BrightEdge or Covario or Rank Above or SearchMetrics, those kinds of things, you want your rank, and then you want the number of visits. Essentially, what you get if you put these together is you can get a sense of, oh, you know what, this drove quite a bit of traffic, but my ranking wasn't that high. Therefore I am inferring that this is a head term. Or, oh, you know what, my visits were quite low even though my ranking was quite high. Therefore I am inferring this is a tail term. Hopefully in the future someone will make some spiffy software to do that. Let me think about that. So, once you have these distributions, you can see the number of keywords that are in each of these buckets, the number of visits that they send, the average ranking of those keywords. So again, you can calculate that inside your Moz web app or a number of these other apps, and conversion actions that you drive from these.
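Once each keyword has a bucket, the per-bucket view (number of keywords, visits, average rank, conversions) is a simple aggregation. This Python sketch assumes the bucket labels are already assigned; the rows, field names, and `summarize_buckets` are all hypothetical.

```python
from collections import defaultdict

# Hypothetical keyword rows; bucket labels are assumed to be assigned already.
rows = [
    {"keyword": "blue widgets", "bucket": "head", "visits": 900, "rank": 8, "conversions": 9},
    {"keyword": "widgets", "bucket": "head", "visits": 700, "rank": 6, "conversions": 6},
    {"keyword": "blue widgets for small boats", "bucket": "tail", "visits": 40, "rank": 3, "conversions": 4},
    {"keyword": "left-handed widget repair", "bucket": "tail", "visits": 20, "rank": 5, "conversions": 2},
]


def summarize_buckets(rows):
    """Return bucket -> keyword count, total visits, average rank, total conversions."""
    sums = defaultdict(lambda: {"keywords": 0, "visits": 0, "rank_total": 0, "conversions": 0})
    for row in rows:
        s = sums[row["bucket"]]
        s["keywords"] += 1
        s["visits"] += row["visits"]
        s["rank_total"] += row["rank"]
        s["conversions"] += row["conversions"]
    return {
        bucket: {
            "keywords": s["keywords"],
            "visits": s["visits"],
            "avg_rank": s["rank_total"] / s["keywords"],
            "conversions": s["conversions"],
        }
        for bucket, s in sums.items()
    }


summary = summarize_buckets(rows)
for bucket, stats in summary.items():
    print(bucket, stats)
```

In this invented data the tail converts at a far higher rate per visit than the head, which is the kind of pattern that would shift attention away from a handful of vanity keywords.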

What's great about this is this really lets you see, like, "Hey, hang on a second. You know what? I spend all my time worrying about my rankings for these 45 keywords. The ones that are in my head, that are driving most of my traffic. My average ranking is #8, so I still have a lot of room to move. But if I look at my conversion actions across these, I think that the biggest opportunity might actually be, maybe it is here in the tail, or even in the very long tail." Then I would think to myself, boy, I need to stop spending a bunch of time worrying about these few keywords that I manage and try to get rankings up on the vanity keywords. I need to think a lot more about the value of tail, because for every keyword that I can capture here, the number of conversion actions I can get from that is dramatically higher. When you see metrics like this in your analytics this really drives you to be a different kind of marketer. To think to yourself, "All right. How do I get more user generated content? How can I produce more editorial content? Can I license content from somewhere? Can I syndicate out my content some places and get more people contributing back to me? Content is clearly driving a ton of value, and I need to do more content marketing."

Likewise, I think this is actually coming really soon in the Moz web app, but I highly urge you to segment branded versus unbranded keyword terms. This is incredibly important because if branded is driving all of your value, then SEO is not really working for you other than to capture keywords that you would almost certainly otherwise capture. When unbranded is capturing a lot of value, that's really your sort of SEO work capturing people early in the funnel in the discovery process before they know about your brand. So, breaking out branded from unbranded really lets you see how well your managed SEO efforts are going.

Likewise, branded does let you see how well efforts like branding and market share and mindshare among consumers, or mindshare among businesses if you're B2B, are going. If you see these metrics trending up or down, particularly if you see branded trending down, you might think to yourself, boy, we need to get out there more. We need to get more exposure. People are losing excitement or interest in our brand. If you see unbranded going down, you might be thinking to yourself, well, you know what, either we're losing out in competitive rankings or we're losing out in the long tail. Then you can look up here into the keyword distribution curve and figure out where that is happening and why you are losing or gaining. This is very important from an analytic standpoint. It is also important from an action-driving standpoint. It can tell you what to do, what to concentrate on, what to worry about and not, where campaigns are going well and not. If your branding is going badly, you might want to talk to whoever is running your brand advertising. That's probably in their wheelhouse.
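One simple way to do the branded versus unbranded split is a substring match against a list of brand terms. The Python sketch below assumes that approach; the brand term "acme", the sample rows, and the function names are hypothetical, and real brand lists usually need misspellings and product names added.

```python
# Hypothetical brand terms; extend with misspellings and product names as needed.
BRAND_TERMS = ("acme",)


def is_branded(keyword, brand_terms=BRAND_TERMS):
    """True if the keyword contains any brand term (case-insensitive substring match)."""
    lowered = keyword.lower()
    return any(term in lowered for term in brand_terms)


def split_by_brand(rows, brand_terms=BRAND_TERMS):
    """Sum visits and conversions for branded and unbranded keywords.

    `rows` is an iterable of (keyword, visits, conversions) tuples.
    """
    totals = {
        "branded": {"visits": 0, "conversions": 0},
        "unbranded": {"visits": 0, "conversions": 0},
    }
    for keyword, visits, conversions in rows:
        segment = "branded" if is_branded(keyword, brand_terms) else "unbranded"
        totals[segment]["visits"] += visits
        totals[segment]["conversions"] += conversions
    return totals


rows = [
    ("acme widgets", 300, 12),
    ("Acme login", 150, 1),
    ("buy blue widgets", 200, 5),
    ("widget repair near me", 50, 2),
]
print(split_by_brand(rows))
```

Running the same split on each reporting period gives the branded and unbranded trend lines discussed above.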

The next thing I like to look at is top keywords from a keyword metric standpoint. So, you have your top keywords here, and you probably would have a big list of keywords. In the Moz web app, I keep track of around 75 to 80. I could have up to 300 in there. I do searches. You can do this just using your Google Analytics, which is free. So I might look at the number of searches that were generated. This is going to have to come from AdWords, which is frustrating, but if you can do it for your managed keywords, it is very nice to have that number in there. I might look at visits, conversions, average rank, and if you want, I would add one more here and look at, I like adding keyword difficulty and looking at that. The reason is I can really get a sense of which keywords are going to be easiest for me. If I see this keyword has 4 conversions, 2 conversions, 1 conversion, but if I see something like, oh, and there is only 15 searches, I am ranking #1, there is not a lot of opportunity there. But I see here, oh, I have a 1% conversion rate. If I work on conversion here, and let's say the difficulty, I don't know, this is 55 and this is 48 and this is 72. So, 72, that's going to be super hard. That's going to be very challenging to increase this. There is not a ton of search volume. I am already capturing some of it. This one looks really good to me. That looks like where I would focus some more actual effort on a single keyword, and that's exactly what this list is designed to let you do, to look at conversions against visits and search volume and difficulty and say, "Hey, I have opportunity. You know what? I'd rank a little better if I can push that up to #3, even 3.5. It's going to really help my business. I am going to capture more customers."
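The eyeballing described above can be approximated in a few lines of Python. This is only one possible reading of the heuristic, not a formula from the talk: the rows are invented, and the rules (skip keywords already ranking #1, skip anything above a difficulty cutoff of 60, then sort by searches not yet captured as visits) are assumptions.

```python
# Hypothetical top-keyword rows: searches, visits, conversions, average rank, difficulty.
rows = [
    {"keyword": "widget a", "searches": 15, "visits": 10, "conversions": 4, "rank": 1, "difficulty": 55},
    {"keyword": "widget b", "searches": 2000, "visits": 200, "conversions": 2, "rank": 6, "difficulty": 48},
    {"keyword": "widget c", "searches": 800, "visits": 50, "conversions": 1, "rank": 9, "difficulty": 72},
    {"keyword": "widget d", "searches": 300, "visits": 30, "conversions": 3, "rank": 4, "difficulty": 40},
]


def conversion_rate(row):
    """Conversions per visit; 0.0 when there are no visits."""
    return row["conversions"] / row["visits"] if row["visits"] else 0.0


def opportunities(rows, max_difficulty=60):
    """Keywords with room to move up and a tolerable difficulty score.

    Sorted by searches not yet captured as visits, largest first.
    The difficulty cutoff is an arbitrary illustrative value.
    """
    picked = [r for r in rows if r["rank"] > 1 and r["difficulty"] <= max_difficulty]
    return sorted(picked, key=lambda r: r["searches"] - r["visits"], reverse=True)


for row in opportunities(rows):
    print(row["keyword"], f"{conversion_rate(row):.1%}", row["searches"] - row["visits"])
```

Here "widget a" drops out because it already ranks #1 on a tiny volume, and "widget c" drops out on difficulty, leaving the keywords where extra effort looks most likely to pay off.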

The last place I look from a keyword metric, it's technically not a keyword metric, but I think it is very, very important because of how it captures keywords and encapsulates this data, is top content. This is essentially like, oh, all right, here is my home page. Maybe that's my landing page for product A and this is product B. I can see how many visits from search that I am getting on these. I can calculate the number of conversions on those. I look at the number of keywords that are sending business to these pages, and this really helps to tell me the content that I have produced in the past, how successful is that? Going forward, it informs me what content should I keep creating. For product stuff, great. But when you are doing content marketing, if you're running a blog, you're doing video, you're producing articles, you're syndicating or licensing stuff, you're having guest writers post things for you, you're doing user generated content, finding out the value of that stuff is amazing. Being able to know which content drove the right kinds of actions and is driving lots of different keywords, long tail stuff, head stuff, whatever it is, lots of conversions, lots of visits from search. You might want to segment by the way so that this is conversions, specifically search conversions, so that you are not getting, for example, something mixed up where these are people coming from other sources to these pages and then converting. You actually want to know for the visitors from search how many conversions do you get on these. Having this information is going to be great for analyzing previous content and knowing what types of content to produce.

So, what's great about the analytics process is it makes an SEO a much more data driven marketer. I look out in the world, and I am sure you do this too, and you see, boy, there is the ad team over there, or there is the paid marketing team over there and they're doing sponsorships of events and they're doing branding plays. They're doing television advertising and radio and print, magazines and newspapers. They're not held to kind of these strict standards. A lot of that stuff is uncapturable. The beautiful thing about SEO is how measurable this process is and how much you can do to improve it. When you start to get these metrics up and running, when you start doing this analytics process, oh man, huge super win. Just awesome.

All right, everyone. Take care. I hope you have a great week. We'll see you again for another edition of Whiteboard Friday. Video transcription by Speechpad.com |