Posted by Cyrus Shepard

In 2003, engineers at Google filed a patent that would rock the SEO world. Named Document Scoring Based on Document Content Update, the patent not only offered insight into the mind of the world’s largest search engine, but provided an accurate roadmap of the path Google would take for years to come.

In his series on the 10 most important search patents of all time, Bill Slawski shows how this patent spawned many child patents. These are often near-duplicate patents with slightly modified passages – the latest discovered as recently as October 2011. Many of the algorithmic changes we see today are simply improvements of these original ideas conceived years ago by Google engineers.

One of these recent updates was Google’s Freshness Update, which places greater emphasis on returning fresher web content for certain queries. Exactly how Google determines freshness was brilliantly explored by Justin Briggs in his analysis of original Google patents. Justin deserves a lot of credit for bringing this analysis to light and helping to inspire this post.

Although the recent Freshness Update received a lot of attention, in truth Google has scored content based on freshness for years.

How Google Scores Fresh Content

Google Fellow Amit Singhal explains that “Different searches have different freshness needs.”

The implication is that Google measures all of your documents for freshness, then scores each page according to the type of search query. While some queries need fresh content, Google still favors older content for other queries (more on this later).

Singhal describes the types of keyword searches most likely to require fresh content:

- Recent events or hot topics: “occupy oakland protest” “nba lockout”

- Regularly recurring events: “NFL scores” “dancing with the stars” “exxon earnings”

- Frequent updates: “best slr cameras” “subaru impreza reviews”

Google’s patents offer incredible insight as to how web content can be evaluated using freshness signals, and rankings of that content adjusted accordingly.

Understand that these are not hard and fast rules, but rather theories consistent with patent filings, experiences of other SEOs, and experiments performed over the years. Nothing substitutes for direct experience, so use your best judgement and feel free to perform your own experiments based on the information below.

Images courtesy of my favorite graphic designer, Dawn Shepard.

1. Freshness by Inception Date

A webpage receives a “freshness” score based on its inception date, and that score decays over time. The score can boost a piece of content for certain search queries, but the boost shrinks as the content ages.

The inception date is often when Google first becomes aware of the document, such as when Googlebot first indexes a document or discovers a link to it.
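A minimal sketch of a decaying freshness score follows. The patent does not publish a formula, so the exponential curve, the function name `inception_freshness`, and the 30-day half-life are all assumptions chosen for illustration.

```python
from datetime import date


def inception_freshness(inception: date, today: date,
                        half_life_days: float = 30.0) -> float:
    """Hypothetical score in (0, 1] that halves every `half_life_days`.

    Exponential decay is an assumption; the patent only says the
    score decays as the document gets older.
    """
    # Treat a future inception date as age zero.
    age_days = max((today - inception).days, 0)
    return 0.5 ** (age_days / half_life_days)


if __name__ == "__main__":
    today = date(2024, 1, 31)
    print(inception_freshness(date(2024, 1, 31), today))  # brand-new page
    print(inception_freshness(date(2024, 1, 1), today))   # 30 days old
```

A shorter half-life would suit news-style queries, while a longer one would suit topics that change slowly.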

"For some queries, older documents may be more favorable than newer ones. As a result, it may be beneficial to adjust the score of a document based on the difference (in age) from the average age of the result set."

- All quotes from US Patent Application Document Scoring Based on Document Content Update

2. Amount of Document Change (How Much) Influences Freshness

The age of a webpage or domain isn’t the only freshness factor. Search engines can score regularly updated content for freshness differently from content that doesn’t change. In this case, the amount of change on your webpage plays a role.

For example, changing a single sentence won’t have as big a freshness impact as a large change to the main body text.
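One way to put a number on "how much changed" is to compare two versions of a page word by word. The sketch below uses Python's standard `difflib`; the function name `change_fraction` and the word-level comparison are assumptions for illustration, not a method described in the patent.

```python
from difflib import SequenceMatcher


def change_fraction(old_text: str, new_text: str) -> float:
    """Hypothetical measure: 0.0 means identical, 1.0 means nothing in common."""
    old_words = old_text.split()
    new_words = new_text.split()
    # ratio() is a similarity in [0, 1]; invert it to get "amount of change".
    similarity = SequenceMatcher(None, old_words, new_words).ratio()
    return 1.0 - similarity


if __name__ == "__main__":
    original = "the quick brown fox jumps over the lazy dog"
    small_edit = "the quick brown fox leaps over the lazy dog"
    rewrite = "an entirely different paragraph about baking cookies at home"
    print(change_fraction(original, small_edit))  # small value
    print(change_fraction(original, rewrite))     # large value
```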

"Also, a document having a relatively large amount of its content updated over time might be scored differently than a document having a relatively small amount of its content updated over time."

3. The Rate of Document Change (How Often) Impacts Freshness

Content that changes more often is scored differently than content that only changes every few years. In this case, consider the homepage of the New York Times, which updates every day and has a high degree of change.

"For example, a document whose content is edited often may be scored differently than a document whose content remains static over time. Also, a document having a relatively large amount of its content updated over time might be scored differently than a document having a relatively small amount of its content updated over time."

4. Freshness Influenced by New Page Creation

Instead of revising individual pages, some websites add completely new pages over time. This is the case with most blogs. Websites that add new pages at a higher rate may earn a higher freshness score than sites that add content less frequently.

Some SEOs insist you should add 20-30% new pages to your site every year. This provides the opportunity to create fresh, relevant content, although you shouldn’t neglect your old content if it needs attention.
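The patent describes a ratio of new pages to total pages over a period of time. As a rough sketch, that ratio can be computed directly; the function name `new_page_ratio` and the sample numbers are hypothetical.

```python
def new_page_ratio(new_pages: int, total_pages: int) -> float:
    """Share of a site's pages that were added during the period measured."""
    if total_pages <= 0:
        return 0.0  # avoid dividing by zero for an empty site
    return new_pages / total_pages


if __name__ == "__main__":
    # A 400-page site that published 100 new pages this year.
    print(f"{new_page_ratio(100, 400):.0%}")
```

A site that lands in the 20-30% range mentioned above would return a value between 0.2 and 0.3.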

"UA may also be determined as a function of one or more factors, such as the number of “new” or unique pages associated with a document over a period of time. Another factor might include the ratio of the number of new or unique pages associated with a document over a period of time versus the total number of pages associated with that document."

5. Changes to Important Content Matter More

Changes made in “important” areas of a document will signal freshness differently than changes made in less important content. Less important content includes navigation, advertisements, and content well below the fold. Important content is generally in the main body text above the fold.
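To illustrate the idea that some parts of a page count for more than others, the sketch below weights the change in each section before combining them. The section names, the weights in `SECTION_WEIGHTS`, and the function `weighted_update_amount` are all assumptions; the patent says only that unimportant content may get little or no weight.

```python
from typing import Mapping

# Hypothetical weights: main content counts fully, boilerplate not at all.
SECTION_WEIGHTS = {
    "main_body": 1.0,
    "comments": 0.1,
    "navigation": 0.0,
    "advertisements": 0.0,
}


def weighted_update_amount(changed_fraction: Mapping[str, float]) -> float:
    """Combine per-section change fractions (0..1) into one weighted value."""
    total_weight = sum(SECTION_WEIGHTS.get(name, 0.0) for name in changed_fraction)
    if total_weight == 0:
        return 0.0  # only ignored sections changed
    weighted = sum(SECTION_WEIGHTS.get(name, 0.0) * fraction
                   for name, fraction in changed_fraction.items())
    return weighted / total_weight


if __name__ == "__main__":
    # New ads and a new menu, but the article itself is untouched.
    print(weighted_update_amount({"navigation": 1.0, "advertisements": 1.0}))
    # Half of the article rewritten.
    print(weighted_update_amount({"main_body": 0.5, "navigation": 1.0}))
```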

"…content deemed to be unimportant if updated/changed, such as Javascript, comments, advertisements, navigational elements, boilerplate material, or date/time tags, may be given relatively little weight or even ignored altogether when determining UA."

6. Rate of New Link Growth Signals Freshness

If a webpage sees an increase in its link growth rate, this could signal relevance to search engines. For example, if folks start linking to your personal website because you are about to get married, your site could be deemed more relevant and fresh (as far as this current event goes).

That said, an unusual increase in linking activity can also indicate spam or manipulative link building techniques. Be careful, as engines are likely to devalue such behavior.
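The patent talks about comparing the number of new links in a recent period with the number in an older period. A rough sketch of that comparison is shown here; the function name `link_trend`, the 90-day window, and the sample dates are assumptions for illustration.

```python
from datetime import date, timedelta
from typing import Iterable


def link_trend(link_dates: Iterable[date], today: date,
               window_days: int = 90) -> int:
    """New links in the latest window minus new links in the window before it.

    A negative result is a downward trend; a positive one is growth.
    """
    recent_start = today - timedelta(days=window_days)
    older_start = today - timedelta(days=2 * window_days)
    recent = older = 0
    for discovered in link_dates:
        if recent_start < discovered <= today:
            recent += 1
        elif older_start < discovered <= recent_start:
            older += 1
    return recent - older


if __name__ == "__main__":
    links = [date(2024, 1, 20), date(2024, 2, 15),
             date(2024, 3, 1), date(2024, 6, 15)]
    print(link_trend(links, today=date(2024, 7, 1)))  # negative: links slowing
```

A very large positive value is not automatically good news, since a sudden spike is exactly the pattern that can look manipulative.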

"…a downward trend in the number or rate of new links (e.g., based on a comparison of the number or rate of new links in a recent time period versus an older time period) over time could signal to search engine 125 that a document is stale, in which case search engine 125 may decrease the document’s score."

7. Links from Fresh Sites Pass Fresh Value

Links from sites that have a high freshness score themselves can raise the freshness score of the sites they link to.

For example, if you obtain a link from an old, static site that hasn’t been updated in years, it doesn't pass the same level of freshness value as a link from a fresh page, such as the homepage of Wired.com. Justin Briggs coined the term FreshRank for this.
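The patent's wording is that a document may be considered fresh if n% of the links pointing to it are fresh. The sketch below applies that rule; the patent does not give a value for n, so the 50% threshold and the function name `is_fresh_by_links` are assumptions.

```python
from typing import Sequence


def is_fresh_by_links(link_is_fresh: Sequence[bool],
                      threshold_pct: float = 50.0) -> bool:
    """True when at least `threshold_pct` percent of inbound links are fresh."""
    if not link_is_fresh:
        return False  # no inbound links, so no freshness to inherit
    fresh_pct = 100.0 * sum(link_is_fresh) / len(link_is_fresh)
    return fresh_pct >= threshold_pct


if __name__ == "__main__":
    # Two links from regularly updated pages, one from a stale page.
    print(is_fresh_by_links([True, True, False]))
    # One fresh link among three stale ones.
    print(is_fresh_by_links([True, False, False, False]))
```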

"Document S may be considered fresh if n% of the links to S are fresh or if the documents containing forward links to S are considered fresh."

8. Changes in Anchor Text Signals May Devalue Links

If a website changes dramatically over time, it makes sense that any new anchor text pointing to the page will change as well.

For example, if you buy a domain about automobiles, then change the format to content about baking, over time your new incoming anchor text will shift from cars to cookies.

In this instance, Google might determine that your site has changed so much that the old anchor text is no longer relevant, and devalue those older links entirely.

"The date of appearance/change of the document pointed to by the link may be a good indicator of the freshness of the anchor text based on the theory that good anchor text may go unchanged when a document gets updated if it is still relevant and good."

9. User Behavior Indicates Freshness

What happens when your once wonderful content becomes old and outdated? For example, your website hosts a local bus schedule... for 2009. As content becomes outdated, folks spend less time on your site. They press the back button to Google's results and choose another URL.

Google picks up on these user behavior metrics and scores your content accordingly.
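As a sketch of how time on page might be compared across two periods for the same query, the function below contrasts average visit lengths. The name `dwell_time_signal`, the labels it returns, and the sample numbers are hypothetical; the patent describes only the general idea of more or less time indicating fresh or stale.

```python
from statistics import mean
from typing import Sequence


def dwell_time_signal(earlier_seconds: Sequence[float],
                      recent_seconds: Sequence[float]) -> str:
    """Compare average time on page between two periods for the same query."""
    if not earlier_seconds or not recent_seconds:
        return "unknown"  # not enough data to compare
    change = mean(recent_seconds) - mean(earlier_seconds)
    if change > 0:
        return "fresh"
    if change < 0:
        return "stale"
    return "unchanged"


if __name__ == "__main__":
    # Visitors used to stay about a minute; now they leave quickly.
    print(dwell_time_signal([60, 80, 70], [20, 30, 25]))
```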

"If a document is returned for a certain query and over time, or within a given time window, users spend either more or less time on average on the document given the same or similar query, then this may be used as an indication that the document is fresh or stale, respectively."

10. Older Documents Still Win Certain Queries

Google understands the newest result isn’t always the best. Consider a search query for “Magna Carta”. An older, authoritative result is probably best here. In this case, having a well-aged document may actually help you.

Google’s patent suggests they determine the freshness requirement for a query based on the average age of documents returned for the query.
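A minimal sketch of adjusting a score by how far a document's age sits from the average age of the result set follows. The linear adjustment, the `weight` of 0.1, the `prefers_older` flag, and the function name `age_adjusted_score` are all assumptions; the patent says only that such an adjustment may be beneficial.

```python
from statistics import mean
from typing import Sequence


def age_adjusted_score(base_score: float, doc_age_days: float,
                       result_ages_days: Sequence[float],
                       weight: float = 0.1,
                       prefers_older: bool = True) -> float:
    """Nudge a score up or down by the document's age relative to the average."""
    average_age = mean(result_ages_days)
    if average_age == 0:
        return base_score  # nothing to compare against
    relative_diff = (doc_age_days - average_age) / average_age
    # For queries that favor older documents, being older than average helps.
    direction = 1.0 if prefers_older else -1.0
    return base_score * (1.0 + direction * weight * relative_diff)


if __name__ == "__main__":
    ages = [100, 100, 100]
    print(age_adjusted_score(1.0, 200, ages, prefers_older=True))   # boosted
    print(age_adjusted_score(1.0, 200, ages, prefers_older=False))  # reduced
```

For a query like “Magna Carta”, `prefers_older=True` rewards the well-aged document; for a news query the flag would be flipped.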


"For some queries, documents with content that has not recently changed may be more favorable than documents with content that has recently changed. As a result, it may be beneficial to adjust the score of a document based on the difference from the average date-of-change of the result set."

Conclusion

The goal of a search engine is to return the most relevant results to users. For your part, this requires an honest assessment of your own content. What part of your site would benefit most from freshness?

Old content that exists simply to generate pageviews, but accomplishes little else, does more harm than good for the web. On the other hand, great content that continually answers a user's query may remain fresh forever.

Be fresh. Be relevant. Most important, be useful.

Do you like this post? Yes No

.jpg)

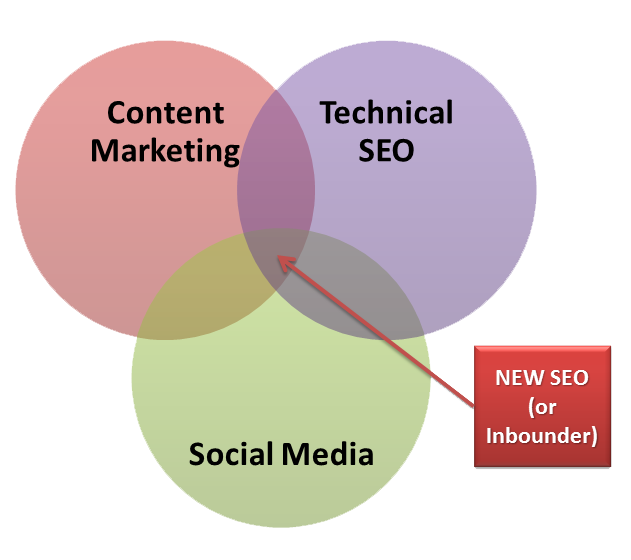

I must admit that lately Google is the cause of my headaches.

I must admit that lately Google is the cause of my headaches.

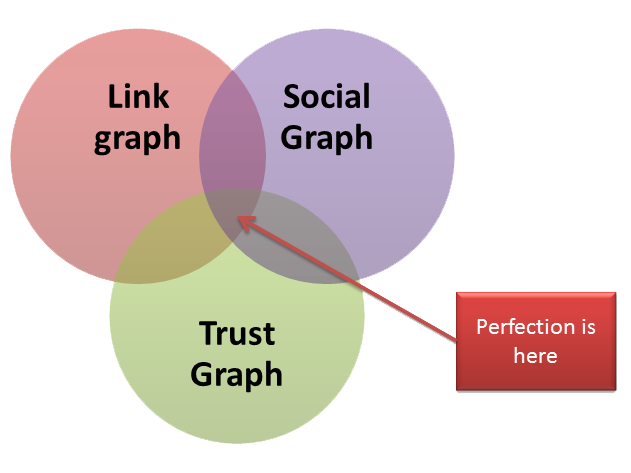

The decline of Link graph

The decline of Link graph